This is topic "You are not a filmmaker, unless you are shooting and editing real film" in forum 8mm Forum at 8mm Forum.

To visit this topic, use this URL:

https://8mmforum.film-tech.com/cgi-bin/ubb/ultimatebb.cgi?ubb=get_topic;f=1;t=010795

Posted by Raleigh M. Christopher (Member # 5209) on June 27, 2016, 01:44 PM:

It's an analog world.

Your ears. Your Eyes.

Sound waves. Light waves.

Records. Film.

NOT DIGITAL

If you're not shooting and editing real film, you can call yourself a moviemaker, and say you make movies, but don't call yourself a filmmaker, and don't say you make films.

Because you don't.

Be a Filmmaker, not a Videomaker

Posted by Tom Spielman (Member # 5352) on June 27, 2016, 03:22 PM:

Not sure if you're familiar with the app and the website called "NextDoor". It's a social media site for people that live in close proximity to each other. Anyway, someone posted on it this weekend that they were going through some of their old stuff and found a Canon 35mm SLR that they had left the batteries in for about 10 years. ![[Wink]](wink.gif)

They were going to throw it away but wanted to see if anyone wanted it before doing so. I said I'd take it. The battery door was trashed but with some aluminum foil and duct tape I was able to get the camera working. It even came with a roll of (expired) film and a couple of decent lenses. The camera itself is not a great find. You can get working versions for $20.

We went for a walk by the lake last night with my daughter, two dogs, and the camera. The 24 shots were taken and I actually found a drug store that claims 1 hour film processing so I'll pick up the CD after work and see what we got.

My family just rolled their eyes when I picked this camera up. My kids can't fathom why I'd want this when we have a perfectly good DSLR that lets you take as many pictures as you want without paying for them and allows you to see them instantly. It even focuses itself, has a built-in flash, and you don't have to worry about having the wrong film for the light. It also takes movies, which the old SLR cannot do.

But they kind of got into taking pictures with the old camera and are eager to see how they turn out.

In the end both film and digital video are human creations. Neither is any more natural than the other. Film is plastic after all and in fact is now produced with the use of robots and computer controlled processes.

Enjoy them both for what they have to offer.

Posted by Raleigh M. Christopher (Member # 5209) on June 27, 2016, 06:10 PM:

I can't agree with you. So many people are apologists for digital. With film, you are actually recording the effects of lightwaves. It IS more natural. Digital is NOT natural, at all. It's merely a computer's approximation/interpretation of reality, while film actually records the reality of the effects of lightwaves, just as a record is a physical representation of a soundwave.

The world is analog by nature. The way your eyes and ears see and hear is an analog, organic process. Little 0's and 1's are not floating around in the atmosphere. Digital and Analog are not equals, in any way, shape or form. Analog will always be more natural and superior to the harsh artificiality of digital.

Think of a shadow. It's a bright sunny day, and there is a tree standing next to your house. The light from the sun hits the tree, and an image of that tree is projected onto the wall of your house. It's not in color; it's merely a shadow - light versus an absence of light. Nevertheless, an image is created, an impression left. This is a wholly natural process. Think of the shadow as like black-and-white film. It's not in color, but nevertheless the image, or an impression, is left behind. This is the same way that film works. These colored dyes and silver halide crystals are sensitive to light waves, and an image or an impression of those light waves is left on the film. With digital it is a completely artificial process. A computer chip merely interpreting reality, spitting out "000 11 01001 11110001 00000 111111" etc. There is no way it can be argued that film is just as artificial as digital.

Your ears hear because a little bone in your head vibrates when sound waves hit it. Just like a stylus vibrates to cut a groove into a master platter to record a soundwave. Conversely, the stylus vibrates from the physical representation of the soundwave to recreate a real, natural soundwave.

Posted by Tom Spielman (Member # 5352) on June 28, 2016, 01:15 AM:

I recommend you do a few google searches using questions like: "Is the brain analog or digital?", "Is the body analog or digital?", or "Is life analog or digital?"

You might be surprised at the results. Your body uses electrical signals to communicate information from one part to another or to store it. There are both analog and digital components to these transmissions. There are some neuroscientists who think that certain parts of the brain work more digitally while others work in analog.

So no, analog isn't more "natural" than digital. Our brains don't use binary computation or anything like that. But using "on" and "off" as meaningful states is very much a part of our nervous system just like it is in the digital world.

Either way, film is a technology used to store the patterns of light that passed through a lens at a certain place and time. It stores them in a way that can be used to create a small scale simulation of those same light patterns later on. But it is very much a man made technology.

In digital camcorders, a CMOS sensor, wiring, CPU, and flash storage do the same thing. Arguably more like the human iris, optic nerve, and brain would do it (while still being very different).

A film projector is a very mechanical beast and for movies in particular there is nothing natural or even analog about 24 frames per second. It's close enough for eyes and brains to interpret it like we would the natural world with the help of our imaginations but anyone can tell the difference.

Our eyes and brains don't really work in fps but faster frame rates start to look less like a movie to us and more real. Some people are attached to 24 fps, not because it's more natural, but because it's more "cinematic". It looks like what we are used to thinking of when we think of a movie.

The "natural" argument really falls flat for me when you think about what movies largely replaced, - live theater. Imagine someone arguing about how "natural" film is to a proponent of live theater back in the 20's.

[ June 28, 2016, 09:08 AM: Message edited by: Tom Spielman ]

Posted by Raleigh M. Christopher (Member # 5209) on June 28, 2016, 09:47 AM:

I still don't agree with you. Analog is and always will be a natural way of recording and reproducing light and soundwaves. Digital is not. And our bodies are not digital. That is preposterous nonsense made up by digital apologists.

Film is man made, but the process is natural.

Of course live theatre is even more faithful to reality. Those are real live flesh and bone human beings walking around in space. That doesn't negate the artificiality of digital video.

Analog, as a concept, takes in all information. Digital by its very nature lacks and cannot display all information. Think of an analog clock versus a digital clock. The sweep of the second hand, as it moves from one second to the next, covers every division of time, to infinity. With a digital clock, it is IMPOSSIBLE. You can only extend the decimal place out another step. And no matter how far out the decimal place is extended, it will always lack. Forever.

Posted by Tom Spielman (Member # 5352) on June 28, 2016, 10:07 AM:

How is the capture of individual images on film or the staccato and clipped motion of a film projector analog?

Posted by Raleigh M. Christopher (Member # 5209) on June 28, 2016, 10:17 AM:

The argument is about the process and theory of recording lightwaves and soundwaves. Physical representation and reproduction through physical means. That is what analog is.

Posted by Tom Spielman (Member # 5352) on June 28, 2016, 01:29 PM:

I think we are using very different definitions for analog. ![[Smile]](smile.gif)

To me "analog" refers to something much more specific than just physical representation and reproduction.

You can have electronic analog computers. You can have both analog and digital magnetic tape, and they will physically look the same. You could digitally record on vinyl, but there are practical reasons for not doing so.

Yes light waves are analog and a digital means of recording them over time doesn't do so perfectly. But neither does using silver halide to produce a series of still images. That's a very discrete process and not analog at all.

Posted by Raleigh M. Christopher (Member # 5209) on June 28, 2016, 01:36 PM:

Yes, it is a completely analog process, because those silver halide crystals are light sensitive, and change physically when light hits them the first time. When white light is then passed over them again in reverse, the original light waves that changed the crystals in the first place are recreated.

Posted by William Olson (Member # 2083) on June 28, 2016, 02:56 PM:

...And the debate rages on. It IS an analog world. Moviemakers who use digital are videomakers and not filmmakers.

Posted by Ty Reynolds (Member # 5117) on June 28, 2016, 03:42 PM:

So filmmakers are moviemakers, and videomakers are also moviemakers? I'm glad we cleared that up.

And that noise my phone makes is not a dial tone.

Posted by Raleigh M. Christopher (Member # 5209) on June 28, 2016, 03:57 PM:

Moviemakers can be either a Filmmaker or a Videomaker, but a videomaker is not a filmmaker, or vice versa.

Posted by Dominique De Bast (Member # 3798) on June 28, 2016, 04:01 PM:

Film and video are two different things. The only question I ask myself is: which one gives pleasure? I'll let you guess my answer ![[Smile]](smile.gif)

Posted by Tom Spielman (Member # 5352) on June 28, 2016, 06:04 PM:

quote:

Yes, it is a completely analog process, because those silver halide crystals are light sensitive, and change physically when light hits them the first time. When white light is then passed over them again in reverse, the original light waves that changed the crystals in the first place are recreated.

The original light waves are approximated and that is true whether it's an analog process or a digital one. It's the methods that differ. Both rely on light sensitive materials. With film, the light causes chemical reactions. In the case of digital cameras, it's an electrical reaction.

The reason I'm saying that capturing movies on film isn't analog (the way I define it) is because you aren't capturing the light continuously. You are capturing it at specific intervals, - like 24 frames per second. It very much resembles the way analog audio is digitized. In that case you're sampling the audio signal every so many thousandths of a second. With film you're sampling the light every 1/24th of a second. In that way filming is more like a digital process. Note that I didn't say binary. I said digital.
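The sampling comparison above can be sketched in a few lines. This is only an illustration: the `brightness` function is a made-up, continuously varying light level, and 44,100 samples per second is used as the audio-style rate because that is the CD audio standard, not because anyone in the thread specified it.

```python
import math

def sample(signal, rate_hz, duration_s):
    """Return (time, value) pairs taken at evenly spaced instants."""
    count = int(rate_hz * duration_s)
    return [(n / rate_hz, signal(n / rate_hz)) for n in range(count)]

def brightness(t):
    # Hypothetical continuous light level that flickers 3 times per second.
    return 0.5 + 0.5 * math.sin(2 * math.pi * 3 * t)

# A movie camera "samples" the scene 24 times per second.
frames = sample(brightness, rate_hz=24, duration_s=1.0)

# Digitized audio does the same thing in time, just far more often.
audio_like = sample(brightness, rate_hz=44100, duration_s=1.0)

print(len(frames), len(audio_like))
```

In both cases the continuous signal is only looked at on a fixed schedule; whatever happens between two sample instants is not recorded. What each sample stores (a chemical image or a number) is a separate question.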

Posted by William Olson (Member # 2083) on June 28, 2016, 07:57 PM:

Let's just say film is non-digital since it isn't ones and zeros.

Posted by Tom Spielman (Member # 5352) on June 28, 2016, 10:21 PM:

I think where I'm coming at this differently is that I see film more as a technological marvel rather than as a product of a natural, organic, and analog process.

Does the fact that digital images are stored as a series of ones and zeros make them intrinsically less satisfying in some way? I don't know if that's quite it.

Decades ago I used to work with a guy who lived through a punched card to hard drive transition at his company. At that time there was a man working there who was quite unhappy about it. He could physically pull a punched card out of a deck and read it. He could manually sort them. He'd no longer be able to do that with records stored on magnetic drives.

Punched cards are digital/binary representations of information but you can see and touch them.

Posted by Raleigh M. Christopher (Member # 5209) on June 29, 2016, 07:20 AM:

How about a still photograph taken on medium format film? Are you going to claim that is "digital" too?

I need to understand how you personally are defining analog.

Here is one definition of analog:

"Home : Technical Terms : Analog Definition

Analog

As humans, we perceive the world in analog. Everything we see and hear is a continuous transmission of information to our senses. This continuous stream is what defines analog data. Digital information, on the other hand, estimates analog data using only ones and zeros.

For example, a turntable (or record player) is an analog device, while a CD player is digital. This is because a turntable reads bumps and grooves from a record as a continuous signal, while a CD player only reads a series of ones and zeros. Likewise, a VCR is an analog device, while a DVD player is digital. A VCR reads audio and video from a tape as a continuous stream of information, while a DVD player just reads ones and zeros from a disc.

Since digital devices read only ones and zeros, they can only approximate an audio or video signal. This means analog data is actually more accurate than digital data. However, digital data can be manipulated easier and preserved better than analog data."

The last half of the last statement about digital data being able to be preserved "better" than analog data is highly suspect and dubious, however.

http://techterms.com/definition/analog

Posted by Paul Adsett (Member # 25) on June 29, 2016, 11:06 AM:

quote:

The last half of the last statement about digital data being able to be preserved "better" than analog data is highly suspect and dubious, however.

Based on my experience with DVD disc rot of many of my purchased DVD'S, I would definitely agree with that statement.

Posted by Osi Osgood (Member # 424) on June 29, 2016, 12:21 PM:

That's an interesting thought. I guess you'd have to define what "film" means. Is "film" an actual piece of celluloid, or is "film" referring to a storyline, as many people consider the term "film".

If it's in the "story-line" vein, then yes, digital can be considered film, or be used in the expression, "making a film" ....

... without film. ![[Smile]](smile.gif)

Posted by Tom Spielman (Member # 5352) on June 29, 2016, 12:51 PM:

Raleigh: For the sake of argument, let's define analog as continuous or continuously changing values and digital as exact values taken at discrete intervals.

In electronics an analog component might be producing constantly changing voltage levels in response to some sort of input while a digital component might turn "on" once the voltage reaches a certain threshold and "off" if the voltage falls below a certain threshold.
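A rough sketch of that on/off behaviour, assuming separate "on" and "off" thresholds. The values 3.0 and 2.0 volts, the function name, and the readings are all invented for illustration.

```python
def threshold_switch(voltages, on_at=3.0, off_at=2.0):
    """Turn a list of continuously varying voltages into on/off states.

    The thresholds are hypothetical: the output switches on once the
    input reaches `on_at` and switches off once it drops below `off_at`.
    """
    state = False
    states = []
    for v in voltages:
        if not state and v >= on_at:
            state = True
        elif state and v < off_at:
            state = False
        states.append(state)
    return states

readings = [0.5, 1.8, 2.5, 3.1, 2.7, 2.2, 1.9, 2.5, 3.4]
print(threshold_switch(readings))
# [False, False, False, True, True, True, False, False, True]
```

The input can take any value, but the output only ever reports one of two states, which is the analog versus digital distinction being drawn here.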

Or you could think of an analog clock vs a digital one. An analog clock with mechanical movement has hands that move constantly while a digital clock has a display that changes at precise intervals. There are hybrids of course. Which is more accurate? I understand why you might come to the conclusion that analog is but in general approximation falls more into the realm of analog than digital. Think of how you'd respond to someone who asks you the time if you're wearing a digital watch vs an analog one where the minute hand is partially between the 5 and the 6.

Accuracy in the digital realm depends on sampling rate. If you can raise the sampling rate high enough, it begins to look more continuous and it will exceed the level of accuracy that matters. It will exceed the level of accuracy you can perceive. The key issue then is storage and computational power.

Your eyes and ears can respond only so quickly to stimulus. It doesn't matter if that stimulus is the result of a digital or analog process. If that weren't true, movies wouldn't work. Movies on film do not feed your eyes continuously changing patterns of light. They send a discrete image every 1/24th of a second, - a digital process.

I'll also suggest that the notion that we perceive the world in analog is highly debatable. For example, the blue cones in your eyes don't send a variable signal depending on the intensity of blue light striking them; they send pulses. If stimulated by enough blue light, the neuron fires and the cone resets. That's more of a digital process than an analog one.

Posted by Raleigh M. Christopher (Member # 5209) on June 29, 2016, 01:42 PM:

I don't think we can have this debate if you keep insisting that the human body perceives sound and lightwaves in a digital fashion. It's just absurd.

Also, in your comparison earlier between film and digital video, it seemed you were trying to make the case that analog was just as artificial as digital or that digital was somehow just as analog as film. The difference is that once the lightwaves hit the light sensitive dyes and crystals, and they are physically changed, the buck stops there. And you have an image, an impression, that can be read instantly, is perceivable instantly, by the human eye. CCDs measure light intensity, but then there is a chip saying "okay, I'm going to assign that one a value of 01, and the next 101, and the next 1110, then 0100001" etc. It's digital that is an approximation, not film. It's funny too because people who defend digital always make the "you can't perceive it anyway" argument, admitting that digital is incomplete and not natural.

And you never addressed the question of a still photograph. And how about the groove, the physical representation of the sound wave, literally, in a vinyl LP versus a digital file? Do our ears hear "digitally"?

Yes both film and digital video operate with frames and it's the persistence of vision that creates the illusion of movement. But the argument is about the manner in which light and sound waves are recorded, and film being a more natural process of recording lightwaves with greater fidelity.

Also, you are mixing up terms digital and binary. Digital is 0's and 1's. Binary can be on or off, red or blue, black or white. You see the difference there?

Posted by Tom Spielman (Member # 5352) on June 29, 2016, 04:54 PM:

A still photograph is analog. ![[Wink]](wink.gif)

Binary refers to a numeric system made up of 1's and 0's. But, yeah, it can also be two states like off and on.

Digital is not ones and zeros.

Digital says nothing about any particular number systems though we often associate it with binary. It's not an exact definition but think of analog as continuous vs digital as discrete. You can have 4 state systems and they'd still be digital.
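To make the "discrete, not necessarily binary" point concrete, here is a small sketch that sorts a continuous reading into a fixed number of states. The `quantize` helper and the sample readings are made up for illustration.

```python
def quantize(value, levels):
    """Map a value in [0.0, 1.0] to one of `levels` discrete states (0 .. levels-1)."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be between 0 and 1")
    # The top of the range is folded into the highest state.
    return min(int(value * levels), levels - 1)

readings = [0.0, 0.1, 0.3, 0.55, 0.8, 1.0]

four_state = [quantize(r, 4) for r in readings]  # a 4-state system
two_state = [quantize(r, 2) for r in readings]   # the familiar binary case

print(four_state)  # [0, 0, 1, 2, 3, 3]
print(two_state)   # [0, 0, 0, 1, 1, 1]
```

Both outputs are digital in the sense used here, because each reading lands in one of a countable set of states. Binary is just the special case with two of them.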

If your definition of "digital" IS ones and zeros then I can see why you'd reject any notion that any part of us functions digitally. I'm not saying our eyes or brains represent the world in a binary fashion. I'm saying our eyes send signals that more closely resemble digital signals than analog signals, - discrete vs continuous.

And though you may not accept it, there is a lot of evidence that information in the brain is stored both digitally and in analog form.

I will leave you with this:

quote:

Neural coding

From Wikipedia, the free encyclopedia

Neural coding is a neuro-science related field concerned with characterizing the relationship between the stimulus and the individual or ensemble neuronal responses and the relationship among the electrical activity of the neurons in the ensemble.[1] Based on the theory that sensory and other information is represented in the brain by networks of neurons, it is thought that neurons can encode both digital and analog information.[2]

So, no, I don't see analog as any more or less natural than digital.

Posted by Raleigh M. Christopher (Member # 5209) on June 29, 2016, 08:23 PM:

In the context we are discussing, Digital IS 1's and 0's.

Digital is an artificial way of recording lightwaves, and analog film is a natural way.

Lightwaves and Soundwaves are analog.

We'll never agree. And I'll always find digital inferior to analog on more than one level, and a majority of levels.

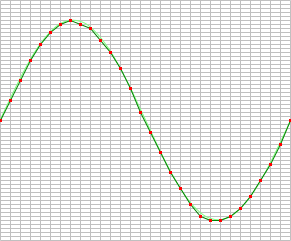

Graphic showing the difference between a digital signal and an analog signal

Posted by Thomas Dafnides (Member # 1851) on June 29, 2016, 09:40 PM:

I find that film projection in the theater is more relaxing (or dream like) than digital projection in the theater, which somehow leaves you more tense. You somehow detach more in film projection.

Posted by Raleigh M. Christopher (Member # 5209) on June 29, 2016, 09:44 PM:

That's because DCP and the look of digital is so harsh. No grain, and very plastic looking.

Posted by Tom Spielman (Member # 5352) on June 30, 2016, 12:12 AM:

Might be time for full disclosure. Part of my job over the last decade has involved capturing analog and digital data. Not sound or video and nothing that requires that level of resolution or sampling rate to faithfully reproduce.

What I do involves various types of sensors. I'm not sampling data at a rate of thousands of times per second, but I am sampling it over a period of weeks, months, and sometimes years which brings its own set of challenges.

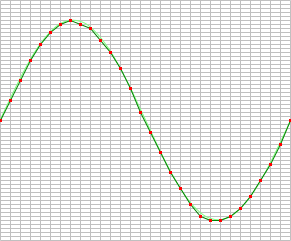

While the graph that Raleigh posted the link to is illustrative in how it shows the differences between analog and digital signals, it's not very representative in terms of accuracy if you were trying to capture audio or video. It's showing a very clean and smooth analog signal with a very poor digital sampling rate.

Below is a graph with better digital resolution:

As you improve the digital resolution, it will more closely follow the curve to the point where if it's audio for example, the human ear can't distinguish the difference. This has been confirmed in blind tests.
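The effect of resolution on how closely the staircase follows the curve can be estimated numerically. The sketch below is a simplified model, not a description of any real converter: it samples one cycle of a sine wave, holds each sample until the next one, rounds it to the nearest of a fixed number of levels, and reports the largest gap from the true curve. The function name and all parameter values are assumptions chosen for illustration.

```python
import math

def staircase_error(samples_per_cycle, levels, check_points=1000):
    """Largest gap between one sine cycle and its sampled, quantized staircase."""
    step = 2.0 / (levels - 1)  # spacing between levels spanning -1..1
    worst = 0.0
    for i in range(check_points):
        t = i / check_points  # position within the cycle, 0..1
        # Time of the most recent sample (sample-and-hold).
        held_t = math.floor(t * samples_per_cycle) / samples_per_cycle
        sampled = math.sin(2 * math.pi * held_t)
        # Round the sample to the nearest available level.
        quantized = round((sampled + 1.0) / step) * step - 1.0
        worst = max(worst, abs(math.sin(2 * math.pi * t) - quantized))
    return worst

coarse = staircase_error(samples_per_cycle=8, levels=4)
fine = staircase_error(samples_per_cycle=256, levels=256)
print(coarse, fine)
```

With few samples and few levels the worst-case gap is a large fraction of the signal's range; raising both shrinks it sharply. Whether the remaining gap is audible or visible is the perceptual question being argued in this thread, which the code does not settle.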

The other thing to remember is that imperfections exist and are introduced into the analog signal. When digitizing it's possible to eliminate that "noise" to give a truer representation of the actual analog information.

You're right, Raleigh, that we are destined to not agree on a lot of things when it comes to analog vs digital. Here's what I will say though. While there are exceptions, in general it's not pleasing to look at a photo that's visibly pixelated. Nor is it pleasing to look at a picture that's too grainy. But a slight grain might actually be pleasing, whereas a slight pixelation is at best just OK.

Posted by Brian Fretwell (Member # 4302) on June 30, 2016, 04:16 AM:

Just a couple of ideas.

The main practical difference between analogue and digital signals is that analogue ones change when copied due to small non linearities in analogue media, digital ones (with checking for copy errors) don't. A film pirate's dream come true so copy protection has to be inserted.
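The copying difference can be simulated roughly as follows. This is a toy model under stated assumptions: analog copying error is modelled as a small random disturbance per generation (a simplification of the non-linearities mentioned above), the noise level is invented, and the digital "check for copy errors" is modelled with a SHA-256 checksum comparison.

```python
import hashlib
import random

def analog_copy(signal, rng, noise=0.01):
    # Each generation adds a small random error; the noise level is hypothetical.
    return [s + rng.gauss(0.0, noise) for s in signal]

def digital_copy(data, expected_digest):
    # Copy the bytes, then verify the copy against a known checksum.
    copy = bytes(data)
    if hashlib.sha256(copy).hexdigest() != expected_digest:
        raise IOError("copy error detected")
    return copy

rng = random.Random(0)
original_signal = [0.0, 0.25, 0.5, 0.75, 1.0]
original_bytes = bytes([0, 64, 128, 191, 255])
digest = hashlib.sha256(original_bytes).hexdigest()

analog, digital = original_signal, original_bytes
for _ in range(10):  # ten generations of copies
    analog = analog_copy(analog, rng)
    digital = digital_copy(digital, digest)

drift = max(abs(a - b) for a, b in zip(analog, original_signal))
print("analog drift after 10 generations:", drift)
print("digital copy identical:", digital == original_bytes)
```

The analog chain accumulates error with every generation, while the verified digital chain either reproduces the original exactly or reports a failure.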

As the graph shows the frequency of sampling and number of steps of level that are encoded decides the fidelity of the signal (low numbers in either/ both add quantisation noise when reconverted, as the signal will not exactly match the original). I don't want to get into the differential algebra involved it's been too long a time since I did Delta X and Delta Y in school. The steps between levels in the digital signal are ironed out by the digital - analogue converters so they have to be of the best quality, the reason add on converters became big business in the high end CD Hi-Fi world.

The high bit rate for the best in the digital world means almost all signals are compressed, often in a way that loss of several bits/bytes means a great disruption to the sound or picture quality. In digital MPEG2 TV this can lead to one picture with elements moving in the shape of the next shot for a second, a bit like the Predator in the film of the same name moving against the forest background.

What I have put is all a bit circular so the order in which I have put the above may not be the best - sorry. And no conclusions from me as I think both systems have their place.

Posted by Raleigh M. Christopher (Member # 5209) on June 30, 2016, 08:32 AM:

I think digital video has its place too: It's great for the evening news, sports, porn (not being sarcastic at all), and just a lot of programming on television.

But I prefer my CINEMA on real bona fide analog FILM.

